Children's charity the NSPCC has said a drop in Facebook's removal of harmful content was a "significant failure in corporate responsibility".

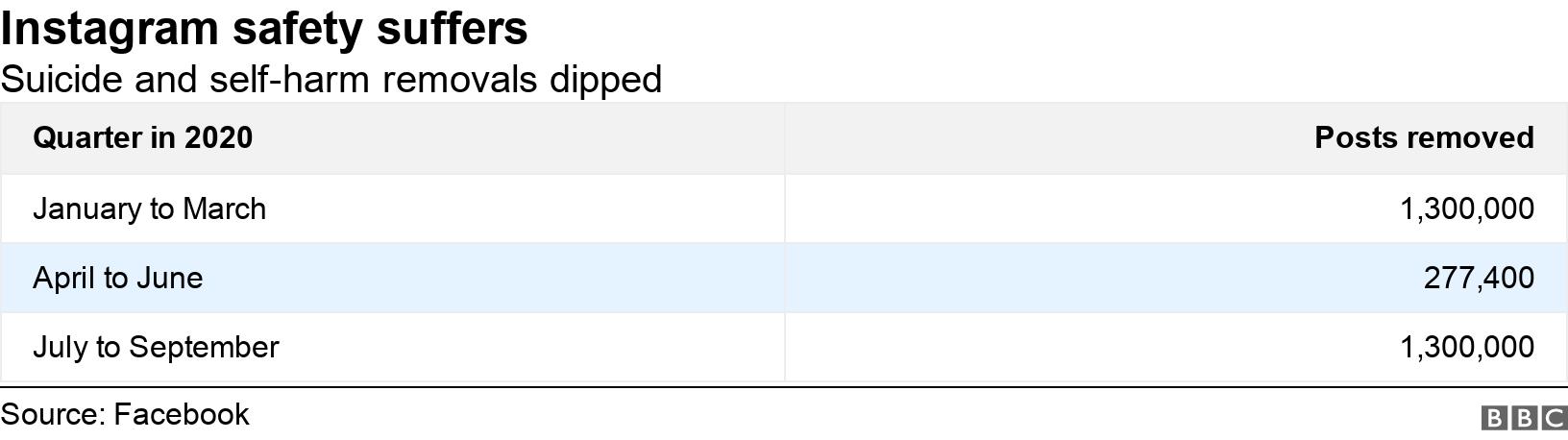

Facebook's own records show its Instagram app removed almost 80% less graphic content about suicide and self-harm between April and June this year than in the previous quarter.

Covid restrictions meant most of the company's content moderators were sent home.

Facebook said it prioritised the removal of the most harmful content.

Figures published on Thursday showed that as restrictions were lifted and moderators started to go back to work, the number of removals went back up to pre-Covid levels.

'Not surprised'

After the death of the teenager Molly Russell, Facebook committed itself to taking down more graphic posts, pictures and even cartoons about self-harm and suicide.

But the NSPCC said the reduction in takedowns had "exposed young users to even greater risk of avoidable harm during the pandemic".

The social network has responded by saying "despite this decrease we prioritised and took action on the most harmful content within this category".

Chris Gray is an ex-Facebook moderator who is now involved in a legal dispute with the company.

"I'm not surprised at all," he told the BBC.

"You take everybody out of the office and send them home, well who's going to do the work?"

That leaves the automatic systems in charge.

But they still miss posts, in some cases even when the creators themselves have added trigger warnings flagging that the images featured contain blood, scars and other forms of self-harm.

Mr Gray says it is clear that the technology cannot cope.

"It's chaos, as soon as the humans are out, we can see... there's just way, way more self-harm, child exploitation, this kind of stuff on the platforms because there's nobody there to deal with it."

Facebook is also at odds with moderators about their working conditions.

More than 200 workers have signed an open letter to Mark Zuckerberg complaining about being forced back into offices which they consider unsafe.

The staff claimed the firm was "needlessly risking" their lives. Facebook has said many are still working from home, and it has "exceeded health guidance on keeping facilities safe" for those who do need to come in.

The figures published on Thursday in Facebook's latest community standards enforcement report again raise questions about the need for greater external regulation.

The UK government's promised Online Harms Bill would impose a statutory duty of care on social media providers and create a new regulator.

But it has been much delayed and it is thought legislation won't be introduced until next year.

'Let down'

Ian Russell, Molly's father, said there was a need for urgent action.

"I think everyone has a responsibility to young and vulnerable people, it's really hard," he explained.

"I don't think the social media companies set up their platforms to be purveyors of dangerous, harmful content but we know that they are and so there's a responsibility at that level for the tech companies to do what they can to make sure their platforms are as safe as is possible."

The NSPCC is more forthright.

"Sadly, young people who needed protection from damaging content were let down by Instagram's steep reduction in takedowns of harmful suicide and self-harm posts," said Andy Burrows, the charity's head of child safety online policy.

"Although Instagram's performance is returning to pre-pandemic levels, young people continue to be exposed to unacceptable levels of harm.

"The government has a chance to fix this by ensuring the Online Harms Bill gives a regulator the tools and sanctions necessary to hold big tech to account."

Last week, Instagram announced it was deploying new software tools across the EU that would lead to more automatic removals of the worst kind of content.

Facebook said "our proactive detection rates for violating content are up from the second quarter across most policies".

It put this down to the development of AI tools that have helped it detect offending posts in a wider range of languages.
